Marat Khamadeev

Matrix completion problem will improve self-supervised learning

Machine learning methods are traditionally divided into two categories, supervised and unsupervised learning, with some intermediate variants between them. In supervised learning, the training data comes with labels, and the model must learn the dependency between inputs and labels. In unsupervised learning, the model only needs to describe how unlabeled data is arranged in the feature space, for example by clustering it.

However, there is often a need to identify dependencies in a large amount of unlabeled data for which creating labels is too costly. This is where self-supervised learning (SSL), an approach that has been actively developed in recent years, comes to the rescue.

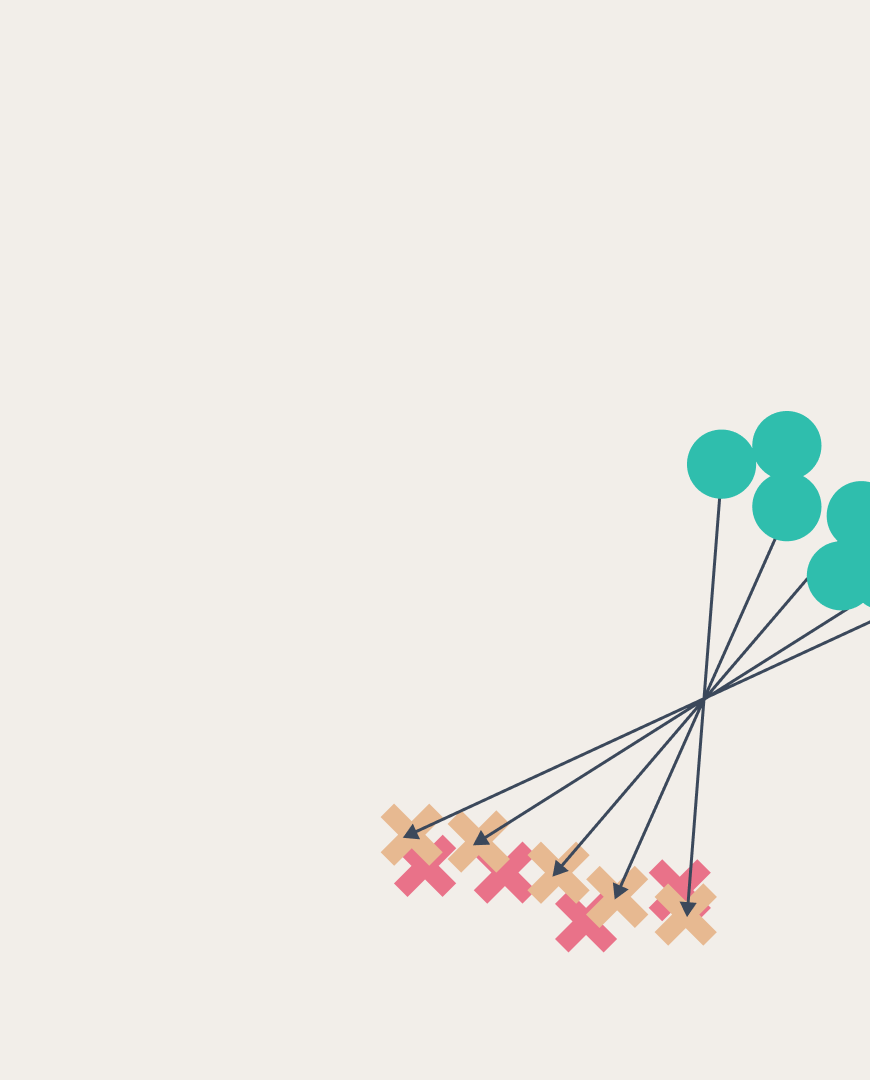

SSL is based on automatically generating labels from the internal structure of the data or from some basic knowledge about it. The model is trained on a so-called pretext task and, as a result, learns a good representation of the data. The pre-trained model can then solve the downstream task more efficiently.

Most SSL methods rely on heuristics, so a theoretical justification could help avoid potential errors. Scientists from Germany and Russia, led by the AIRI CEO and Skoltech Professor Ivan Oseledets, have directed their efforts towards this goal. They start from the assumption that high-dimensional data lies on a hidden smooth low-dimensional manifold inside the high-dimensional space. Smoothness makes it possible to introduce the Laplace-Beltrami differential operator, which generalizes the more familiar Laplace operator to Riemannian manifolds.

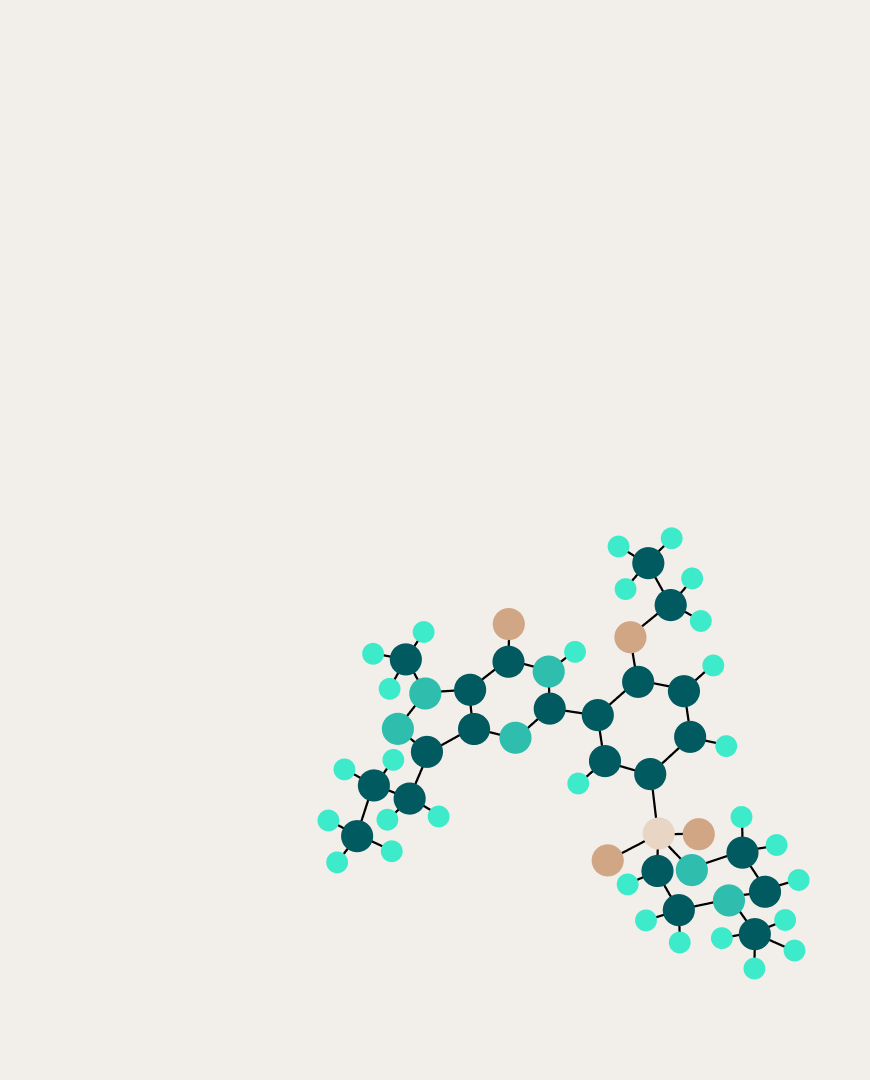

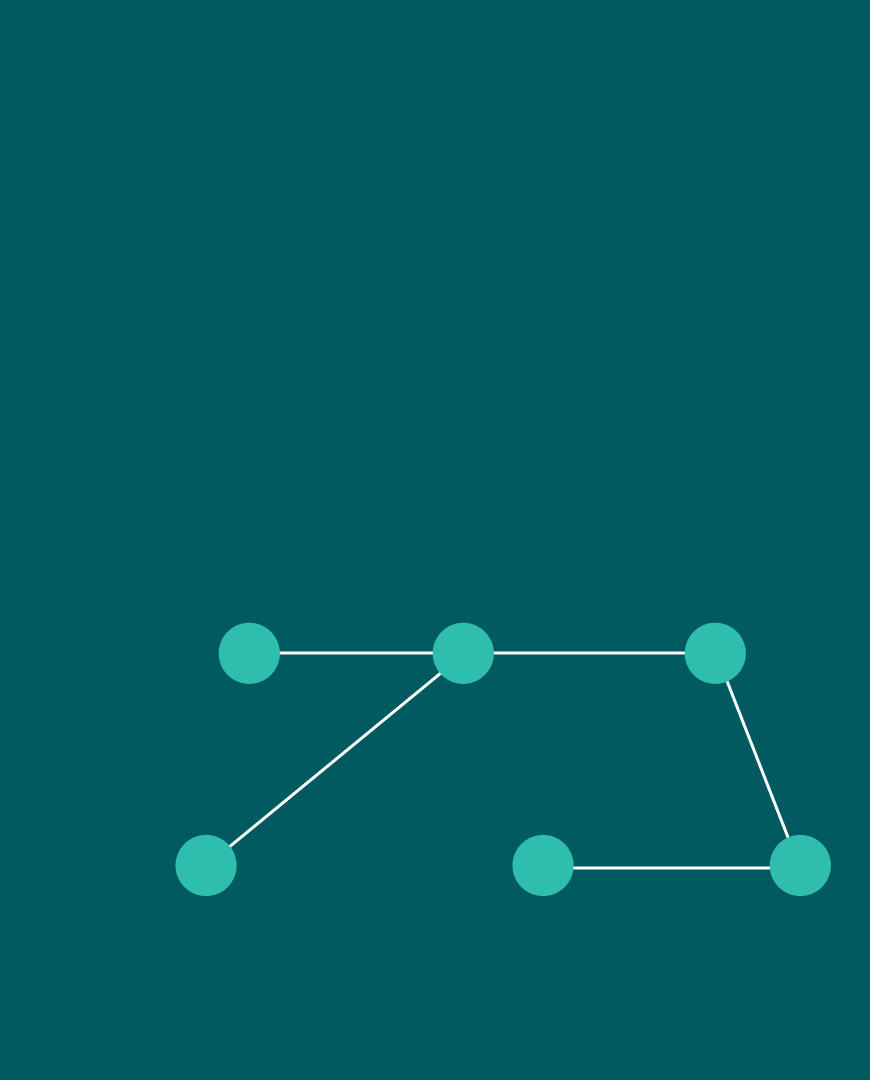

In practice, researchers work with a finite (although large) number of points on the manifold. Nevertheless, the adopted hypothesis makes it possible to approximate the Laplace operator with graphs or meshes and to represent it using vectors and matrices. The authors showed that learning optimal representations is equivalent to a trace maximization problem, a form of the eigenvalue problem. The team demonstrated that three popular SSL methods (SimCLR, Barlow Twins, VICReg) fall under this formulation.
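The link between a graph approximation and an eigenvalue problem can be illustrated with a minimal NumPy sketch. It is an illustration under stated assumptions, not the authors' code: the toy data (a circle embedded in a 10-dimensional space), the k-nearest-neighbour graph, and the helper names `knn_adjacency`, `normalized_adjacency` and `trace_maximizer` are all hypothetical choices. The sketch relies on a standard linear algebra fact: among all matrices Z with orthonormal columns, the trace of ZᵀAZ for a symmetric matrix A is maximized by the eigenvectors of A with the largest eigenvalues.

```python
import numpy as np

def knn_adjacency(points, k):
    """Symmetric 0/1 adjacency matrix of a k-nearest-neighbour graph."""
    n = points.shape[0]
    diffs = points[:, None, :] - points[None, :, :]
    dist = np.linalg.norm(diffs, axis=-1)
    np.fill_diagonal(dist, np.inf)             # a point is not its own neighbour
    nearest = np.argsort(dist, axis=1)[:, :k]  # indices of the k closest points
    adj = np.zeros((n, n))
    adj[np.repeat(np.arange(n), k), nearest.ravel()] = 1.0
    return np.maximum(adj, adj.T)              # make the graph undirected

def normalized_adjacency(adj):
    """D^{-1/2} A D^{-1/2}, where D is the diagonal degree matrix."""
    inv_sqrt_deg = 1.0 / np.sqrt(adj.sum(axis=1))
    return adj * inv_sqrt_deg[:, None] * inv_sqrt_deg[None, :]

def trace_maximizer(sym_matrix, dim):
    """Orthonormal Z maximizing trace(Z^T A Z): top `dim` eigenvectors of A."""
    eigvals, eigvecs = np.linalg.eigh(sym_matrix)  # eigenvalues in ascending order
    return eigvecs[:, -dim:], eigvals[-dim:]

# Toy data (assumption): 200 points on a circle, a 1-D manifold,
# placed inside a 10-D ambient space by a random linear isometry.
rng = np.random.default_rng(0)
angles = rng.uniform(0.0, 2.0 * np.pi, size=200)
circle = np.stack([np.cos(angles), np.sin(angles)], axis=1)
basis, _ = np.linalg.qr(rng.normal(size=(10, 2)))  # 10x2, orthonormal columns
points = circle @ basis.T

adj = knn_adjacency(points, k=6)
norm_adj = normalized_adjacency(adj)
# Normalized graph Laplacian: a discrete stand-in for the Laplace operator.
# Maximizing the trace with norm_adj is the same as minimizing it with laplacian.
laplacian = np.eye(len(points)) - norm_adj

Z, top_eigvals = trace_maximizer(norm_adj, dim=3)
objective = np.trace(Z.T @ norm_adj @ Z)
print("trace objective:", objective)
print("sum of top eigenvalues:", top_eigvals.sum())
```

Each row of `Z` plays the role of a learned representation of one data point. In SSL methods the graph is not built from distances as it is here, so the k-nearest-neighbour construction should be read only as a convenient stand-in for this toy example.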

Developing their formalism, the researchers were able to explain how SSL methods cope with noisy or missing data, which is common in practice, and why they converge despite it. It turned out that, from the point of view of the Laplace operator, SSL methods solve a low-rank matrix completion problem. According to the scientists, this discovery will make it possible to optimize approaches to self-supervised learning and to extend their application to more areas of machine learning.
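For readers unfamiliar with low-rank matrix completion, the following NumPy sketch shows the general idea on synthetic data: recover the unknown entries of a matrix from the known ones, assuming the full matrix has low rank. It uses a simple textbook scheme (alternating a truncated SVD with re-imposing the known entries) and is not the procedure from the paper. The function name `complete_low_rank`, the matrix sizes, the rank and the share of observed entries are all assumptions made for the illustration.

```python
import numpy as np

def complete_low_rank(observed, mask, rank, n_iters=300):
    """Estimate a low-rank matrix from the entries where `mask` is True.

    Repeats two steps: project the current guess onto matrices of the
    given rank (truncated SVD), then restore the known entries.
    """
    filled = np.where(mask, observed, 0.0)  # start with zeros in the gaps
    for _ in range(n_iters):
        u, s, vt = np.linalg.svd(filled, full_matrices=False)
        low_rank = (u[:, :rank] * s[:rank]) @ vt[:rank]
        filled = np.where(mask, observed, low_rank)
    return low_rank

# Synthetic example (assumption): a 50x50 matrix of rank 2
# with roughly 70% of its entries observed.
rng = np.random.default_rng(0)
truth = rng.normal(size=(50, 2)) @ rng.normal(size=(2, 50))
mask = rng.uniform(size=truth.shape) < 0.7
observed = np.where(mask, truth, np.nan)  # NaN marks an unknown entry

estimate = complete_low_rank(observed, mask, rank=2)

# Relative error measured only on the entries the algorithm never saw.
missing = ~mask
rel_error = (np.linalg.norm((estimate - truth)[missing])
             / np.linalg.norm(truth[missing]))
print("relative error on missing entries:", rel_error)
```

Filling the gaps with zeros would give a relative error of exactly 1 on the missing entries, so any value well below that shows that the low-rank assumption alone carries real information about the unobserved part of the matrix.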

More details about this study can be found in the article published in the proceedings of the NeurIPS 2023 conference.