Marat Khamadeev

Marat Khamadeev

Scientists made domain adaptation for generative adversarial networks five thousand times faster

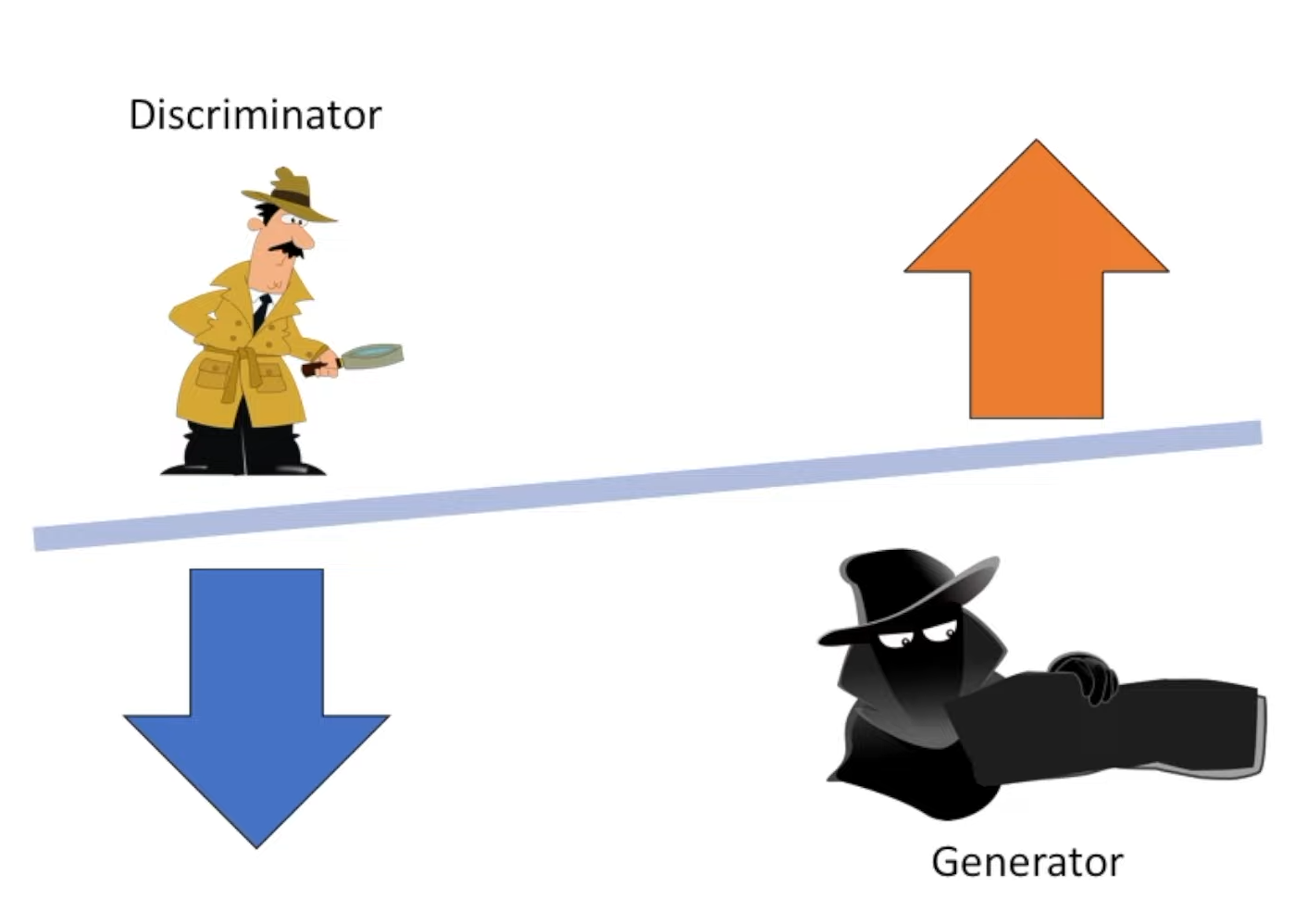

Contemporary generative adversarial networks (GANs) show remarkable performance in modeling image distributions and have applications in a wide range of computer vision tasks: image enhancement, editing, image-to-image translation, etc. GANs consist of two neural networks — generator and discriminator — configured to act against each other. The first generates new samples based on the training set while the second tries to discriminate that it is a fake. During training the overall objective function is minimized by the generator and maximized by the discriminator. This adversarial game allows the generator to get better at faking samples to the point that they are indistinguishable from real samples at the end of training.

Credit: WelcomeAIOverlords / YouTube

Training of modern GANs requires tens or even hundreds of thousands of samples. This limits its applicability only to domains that are represented by a large set of images. The mainstream approach to sidestep this limitation is transfer learning (TL), i.e., fine-tuning the generative model to a domain with few samples starting with a pretrained source model.

The standard approach of GAN TL methods is to fine-tune almost all weights of the pretrained model. It can be reasonable in the case when the target domain is very far from the source one, e.g., when one adapts the generator pretrained on human faces to the domain of animals or buildings. However, there is a wide range of cases when the distance between data domains is not so far. Often target domains are similar to the source one and differ mainly in texture, style, geometry while keep the same content like faces or outdoor scenes. For such cases it seems redundant to fine-tune all weights of the source generator.

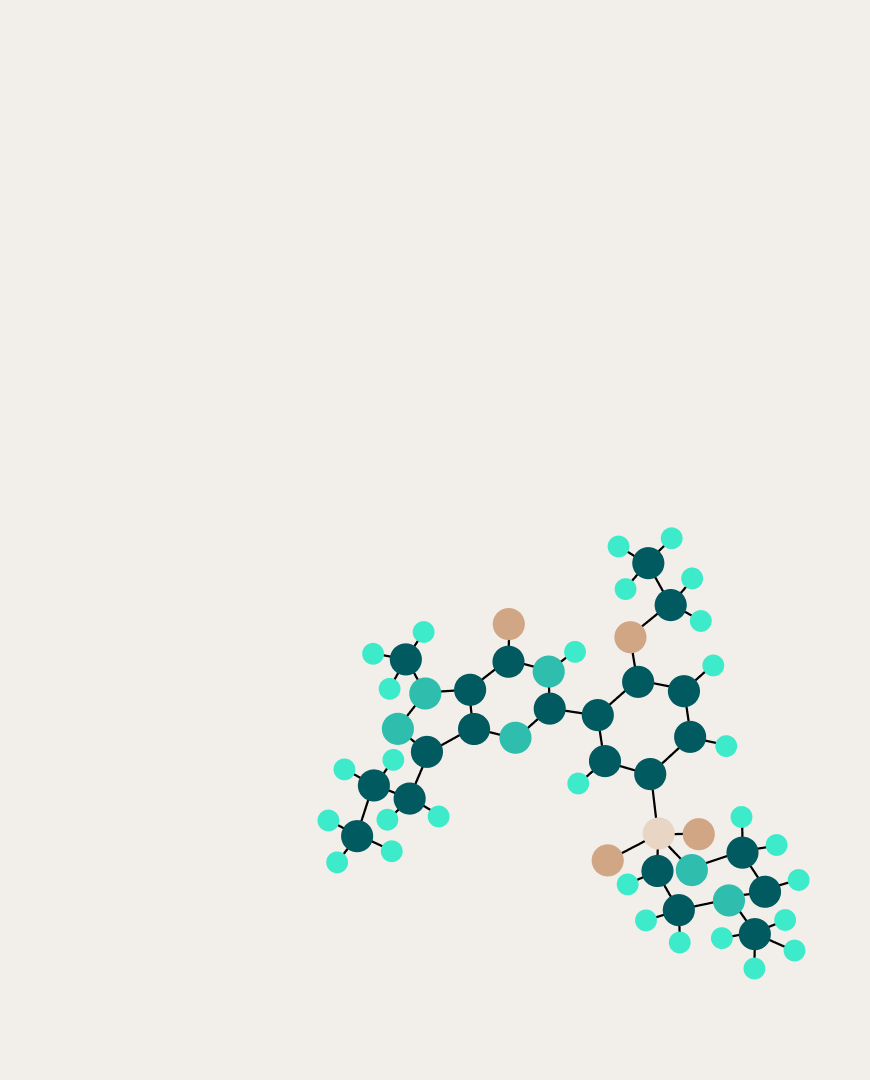

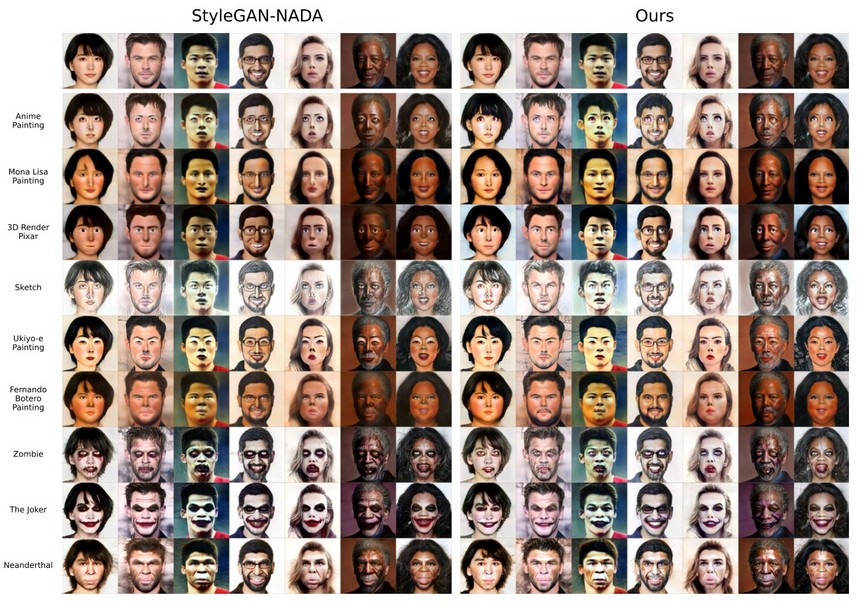

This fact motivated Dmitry Vetrov and his colleagues from HSE, MIPT and AIRI to find a more efficient and compact parameter space for domain adaptation of GANs. Their idea is to optimize for each target domain only a single vector that is called domain vector. This vector may be incorporated into an architecture through the modulation operation at each convolution layer. Applying this parameterization to the StyleGAN2 model allowed scientists to reduce the dimension of the vector from 30 million to 6 thousand that is 5 thousand times less than the original weights space. They showed quantitatively and qualitatively that the new approach can achieve the same quality as optimizing all weights of the StyleGAN2.

Comparison of training setups. The top row represents the real images embedded into StyleGAN2 latent space which latents are then used for the inference of the adapted models. The left block represents results obtained from the original StyleGAN-NADA method that optimize all weights of StyleGAN2. The right block represents results of our method that fine-tunes only the domain vector for each domain

If only such a domain vector is trained, the domain of the generated images changes just as well as if we train all the parameters of the neural network. This drastically reduces the number of optimized parameters, since the dimension of such a domain vector is only 6000 which is orders of magnitude less than the 30 million weights of our generator. It also allowed us to propose a model — the HyperDomainNet — that predicts such a vector only from the description of the target domain.

Such a significant reduction made it possible to solve the problem of multi-domain adaptation of GANs, i.e., when the same model can adapt to multiple domains depending on the input query. Typically, this problem is tackled by previous methods just by fine-tuning separate generators for each target domain independently. In contrast, authors propose to train a hyper-network that predicts the vector for the StyleGAN2 depending on the target domain. They called this network as HyperDomainNet. The immediate benefits of multi-domain framework consist of reducing the training time and the number of trainable parameters because instead of fine-tuning n separate generators one trains one HyperDomainNet to adapt to n domains simultaneously. Another advantage of this method is that it can generalize to unseen domains (i.e., ones which were not presented in training) if n is sufficiently large. Authors empirically show this effect.

More details can be found in the paper published in the Proceedings of the NeurIPS 2022 conference.