Marat Khamadeev

Sphere-guided representation improves 3D reconstruction with neural networks

Three-dimensional reconstruction, the process of digitizing the shape and appearance of objects, plays an important role in fields ranging from medicine and architecture to archaeology and the entertainment industry. In most cases, the object being digitized must be left untouched, which is why engineers and scientists are actively developing remote (or passive) reconstruction methods based on analyzing images captured by cameras.

The classical approach to this problem is multi-view stereo (MVS) reconstruction, which estimates the underlying scene geometry as a point cloud using photometric consistency between different views. Despite its popularity, this approach struggles when surfaces are strongly non-Lambertian or textureless.
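
To make "photometric consistency" concrete: MVS pipelines commonly score a depth hypothesis by comparing the image patches that the hypothesis puts in correspondence, and normalized cross-correlation (NCC) is one widely used score. The sketch below assumes grayscale patches that have already been warped into alignment; `ncc` is an illustrative helper, not code from any particular MVS package. The last line hints at why textureless surfaces are hard: a patch with no intensity variation yields no usable score.

```python
import numpy as np


def ncc(patch_a: np.ndarray, patch_b: np.ndarray, eps: float = 1e-8) -> float:
    """Normalized cross-correlation of two equally sized grayscale patches.

    Returns a value in [-1, 1]; 1 means the patches agree up to a change
    in brightness gain and offset.
    """
    a = patch_a.astype(np.float64).ravel()
    b = patch_b.astype(np.float64).ravel()
    a = a - a.mean()  # remove brightness offset
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum()) + eps  # remove gain
    return float((a * b).sum() / denom)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    textured = rng.random((7, 7))
    # The same textured patch under a different exposure still matches well.
    print(round(ncc(textured, 2.0 * textured + 0.1), 3))
    # A textureless patch has zero variance, so NCC carries no information.
    flat = np.full((7, 7), 0.5)
    print(ncc(flat, flat))
```

A non-Lambertian surface breaks the score differently: a specular highlight visible in only one view changes the patch in a way no gain/offset correction can undo.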

In recent years, approaches that reconstruct the scene using implicit neural surfaces have proven less prone to these problems. Among other advantages, they can recover a connected surface in explicit form and produce renders of impressive quality.

Scientists at Skoltech led by Evgeny Burnaev, who also heads the "Learnable Intelligence" group at AIRI, have directed their efforts toward developing such methods. In particular, the researchers set out to improve the efficiency of 3D reconstruction with implicit neural surfaces.

Current leading methods train neural signed distance fields (SDFs) through differentiable volumetric rendering based on ray marching. Conventional ray marching casts rays from the camera into the scene, samples points along each ray, and accumulates (alpha-composites) the colors predicted at those points, weighted according to the predicted density. The rendering algorithm assumes that points are sampled uniformly along the ray.
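
The conventional procedure can be illustrated on a toy scene. The sketch below is an assumption-laden illustration, not code from any of the methods discussed: an analytic SDF of a unit sphere stands in for the neural network, `toy_color` is a made-up color field, and SDF values are converted to density with the Laplace-CDF formula used by VolSDF (NeuS and UNISURF use different conversions). The compositing weights follow the usual scheme w_i = T_i * alpha_i, where T_i is the transmittance up to sample i.

```python
import numpy as np


def sdf_unit_sphere(points: np.ndarray) -> np.ndarray:
    """Toy signed distance field: negative inside a unit sphere at the origin."""
    return np.linalg.norm(points, axis=-1) - 1.0


def toy_color(points: np.ndarray) -> np.ndarray:
    """Hypothetical color field standing in for the network's color head."""
    return np.clip(0.5 * (points + 1.0), 0.0, 1.0)


def sdf_to_density(sdf: np.ndarray, beta: float = 0.05) -> np.ndarray:
    """VolSDF-style density: (1 / beta) * LaplaceCDF(-sdf) with scale beta."""
    s = -sdf
    tail = 0.5 * np.exp(-np.abs(s) / beta)  # written this way to avoid overflow
    cdf = np.where(s <= 0.0, tail, 1.0 - tail)
    return cdf / beta


def render_ray(origin, direction, near=0.0, far=6.0, n_samples=256, beta=0.05):
    """Render one ray with uniform sampling and alpha compositing."""
    edges = np.linspace(near, far, n_samples + 1)
    t = 0.5 * (edges[:-1] + edges[1:])        # uniform samples: bin midpoints
    deltas = np.diff(edges)                   # spacing between samples
    points = origin + t[:, None] * direction  # (n_samples, 3)

    sdf = sdf_unit_sphere(points)
    sigma = sdf_to_density(sdf, beta)
    alpha = 1.0 - np.exp(-sigma * deltas)     # opacity contributed by each sample
    # Transmittance T_i: fraction of light that reaches sample i unoccluded.
    trans = np.concatenate([[1.0], np.cumprod(1.0 - alpha)[:-1]])
    weights = trans * alpha                   # w_i = T_i * alpha_i

    color = (weights[:, None] * toy_color(points)).sum(axis=0)
    opacity = weights.sum()
    depth = (weights * t).sum() / max(opacity, 1e-10)
    return color, opacity, depth, sdf


if __name__ == "__main__":
    direction = np.array([0.0, 0.0, 1.0])
    color, opacity, depth, sdf = render_ray(np.array([0.0, 0.0, -3.0]), direction)
    print(f"hit ray:  opacity={opacity:.3f}, depth={depth:.3f}, color={np.round(color, 3)}")
    print(f"samples within 0.1 of the surface: {np.mean(np.abs(sdf) < 0.1):.1%}")
    _, miss_opacity, _, _ = render_ray(np.array([0.0, 2.0, -3.0]), direction)
    print(f"miss ray: opacity={miss_opacity:.3f}")
```

Only a few percent of the uniformly placed samples land near the surface; the rest probe empty space or the object's interior, yet each one still costs a network evaluation in a real system.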

The authors proposed improving this approach by adding a discrete representation of the scene: a set of spheres. The new algorithm replaces uniform sampling along the ray with sampling only inside the segments where the ray intersects the spheres. To make this technique work, the researchers proposed a method for jointly optimizing the sphere coordinates and the surface. They also introduced a point resampling scheme that keeps the spheres from getting stuck in local minima, and a repulsion mechanism that encourages the spheres to explore the reconstructed surface thoroughly.
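
The core sampling idea can be sketched as follows: intersect each ray with the sphere cloud and place samples only inside the resulting intervals. This is a minimal NumPy illustration under simplifying assumptions, not the authors' implementation: all spheres share one radius, the function names are hypothetical, samples are spread evenly over the union of intervals, and the sphere cloud is hand-placed on a known surface rather than optimized jointly with the SDF. The resampling and repulsion steps are omitted.

```python
import numpy as np


def ray_sphere_intervals(origin, direction, centers, radii, near, far):
    """Sorted, merged [t_enter, t_exit] intervals where the ray is inside any sphere.

    `direction` is assumed to be a unit vector.
    """
    oc = origin - centers                        # (n_spheres, 3)
    b = oc @ direction                           # (n_spheres,)
    c = np.sum(oc * oc, axis=1) - radii ** 2
    disc = b * b - c                             # > 0 means the ray crosses the sphere
    hit = disc > 0
    root = np.sqrt(disc[hit])
    t0 = np.clip(-b[hit] - root, near, far)
    t1 = np.clip(-b[hit] + root, near, far)
    keep = t1 > t0
    merged = []
    for start, end in sorted(zip(t0[keep], t1[keep])):
        if merged and start <= merged[-1][1]:    # overlaps the previous interval
            merged[-1][1] = max(merged[-1][1], end)
        else:
            merged.append([start, end])
    return np.array(merged, dtype=float).reshape(-1, 2)


def sample_in_intervals(intervals, n_samples):
    """Place n_samples evenly over the total length of the intervals."""
    if len(intervals) == 0:
        return np.empty(0)  # the ray misses every sphere: it gets no samples
    lengths = intervals[:, 1] - intervals[:, 0]
    cum = np.concatenate([[0.0], np.cumsum(lengths)])
    u = (np.arange(n_samples) + 0.5) / n_samples * cum[-1]
    idx = np.searchsorted(cum, u, side="right") - 1  # interval containing each u
    return intervals[idx, 0] + (u - cum[idx])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical sphere cloud: 2000 spheres centered on a unit sphere's
    # surface, as if the joint optimization had already converged.
    centers = rng.normal(size=(2000, 3))
    centers /= np.linalg.norm(centers, axis=1, keepdims=True)
    radii = np.full(len(centers), 0.15)

    direction = np.array([0.0, 0.0, 1.0])
    origin = np.array([0.0, 0.0, -3.0])
    n = 256
    t_uniform = (np.arange(n) + 0.5) / n * 6.0
    t_sphere = sample_in_intervals(
        ray_sphere_intervals(origin, direction, centers, radii, 0.0, 6.0), n)

    def near_surface_fraction(t):
        sdf = np.linalg.norm(origin + t[:, None] * direction, axis=1) - 1.0
        return np.mean(np.abs(sdf) < 0.1)

    print(f"uniform:       {near_surface_fraction(t_uniform):.1%} of samples near the surface")
    print(f"sphere-guided: {near_surface_fraction(t_sphere):.1%} of samples near the surface")

    miss = np.array([0.0, 2.0, -3.0])
    t_miss = sample_in_intervals(
        ray_sphere_intervals(miss, direction, centers, radii, 0.0, 6.0), n)
    print("samples on a ray that misses every sphere:", len(t_miss))
```

Because the intervals come from a closed-form ray-sphere test, skipping empty space requires no extra evaluations of the SDF network. The flip side, visible on the last ray, is that a ray which misses every sphere receives no samples at all.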

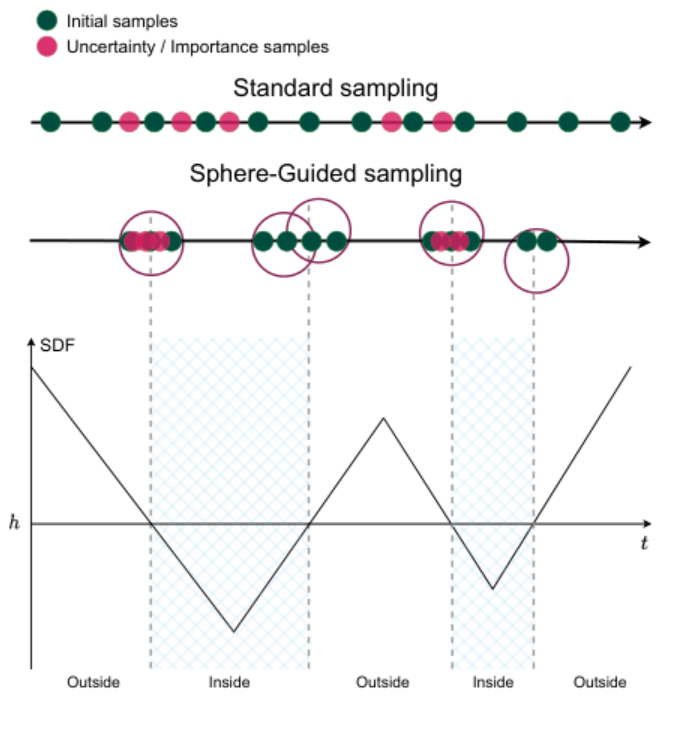

Traditional sampling of points along a ray and sampling using spheres. Below: a plot of the signed distance function.

To evaluate the effectiveness of the new approach, the authors conducted a series of experiments on three popular benchmarks for 3D reconstruction of synthetic and real objects: DTU MVS, BlendedMVS, and Realistic Synthetic 360. They incorporated sphere-guided training into four well-known methods (UNISURF, VolSDF, NeuS, and NeuralWarp) and examined how it changes their performance.

Qualitative results on the Realistic Synthetic 360 dataset

The experiments showed that adding a sphere-based representation efficiently excludes empty regions of the scene from volumetric ray marching without additional forward passes of the neural surface network, which increases the fidelity of the reconstructions compared to the base methods. The authors note that, despite the improved accuracy metrics, the approach can in some cases introduce artifacts into the reconstruction or, conversely, miss rays that do not intersect the sphere cloud in some region of the volume.

Details of the study can be found in the paper accepted to the CVPR 2023 conference.