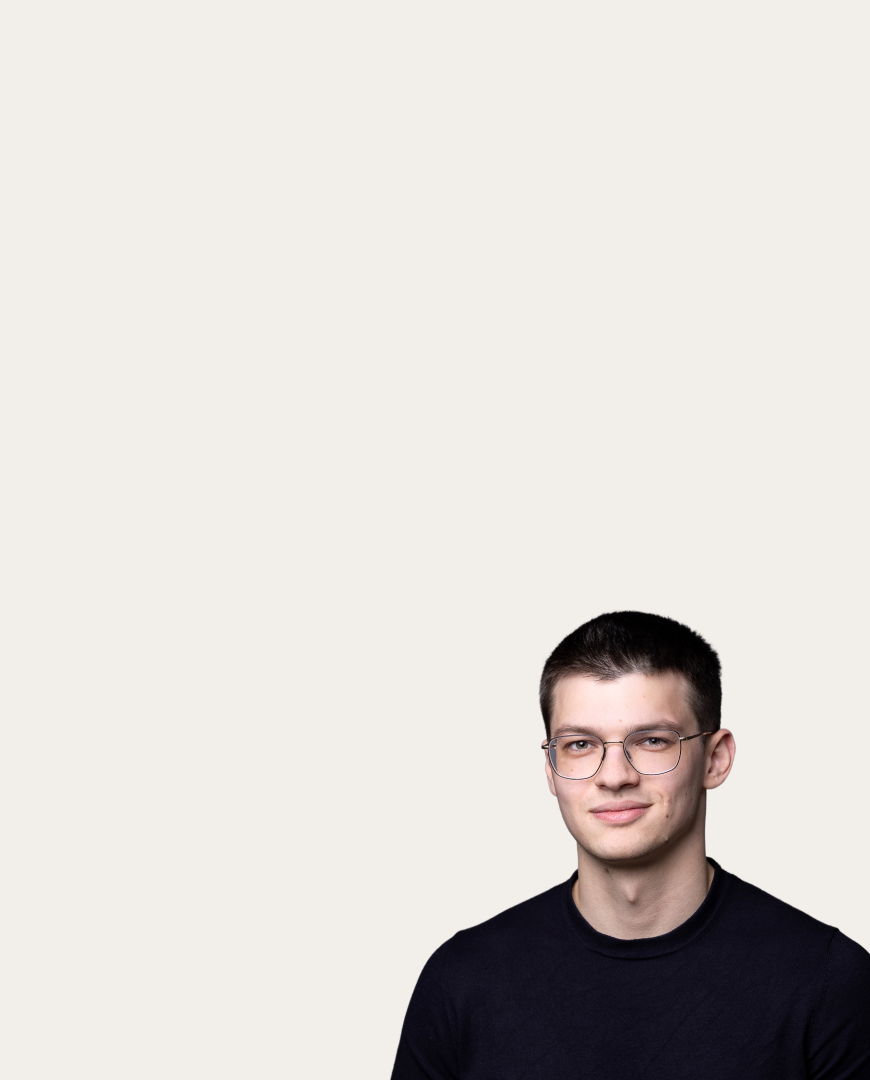

Marat Khamadeev

Gaussian mixtures help improve the learning of object-centric scene representations

The use of neural networks has led to significant progress in computer vision and in other fields that require segmenting objects in complex data, such as two-dimensional images. Today this task is typically handled by multi-layer convolutional networks, in which features become more abstract with depth: early layers respond to simple edges, later layers to entire objects. Learning good representations of these objects is the main goal of models with object-centric architectures.

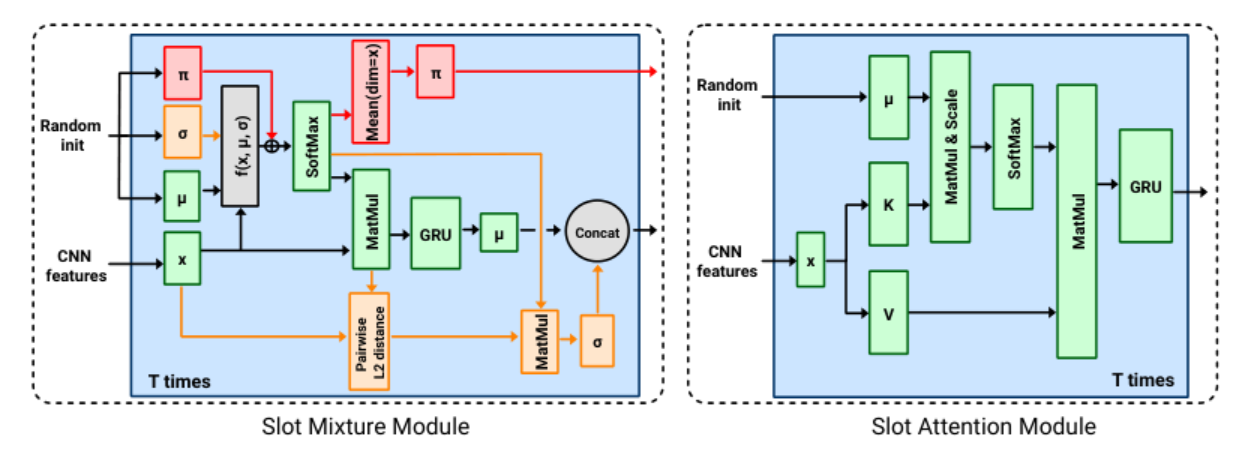

An important step towards object-centric image representations is clustering the learned features so that each cluster corresponds to a single object. Slot Attention (SA) is widely regarded as the most common and effective way to do this: it maps the set of feature vectors produced by the convolutional encoder to a fixed number of output vectors, called slots. After training, each object in the scene is assigned its own slot, and some slots remain empty when the scene contains fewer objects than there are slots.
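The core of this mapping can be sketched in a few lines of NumPy. This is a simplified, hypothetical illustration rather than the reference implementation: the projection matrices `w_q`, `w_k`, `w_v` are random instead of learned, and the layer normalization, iteration loop, and GRU/MLP update of the full method are left out. It shows the key design choice: the softmax is taken over slots rather than over inputs, so slots compete to explain each input vector.

```python
import numpy as np

def softmax(x, axis):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def slot_attention_step(inputs, slots, w_q, w_k, w_v, eps=1e-8):
    """One simplified attention step of a Slot Attention-style module.

    inputs: (N, D_in) encoder features, one vector per spatial position.
    slots:  (K, D) current slot vectors.
    w_q, w_k, w_v: hypothetical projection matrices (random here, learned in practice).
    """
    q = slots @ w_q                            # (K, D) queries from slots
    k = inputs @ w_k                           # (N, D) keys from inputs
    v = inputs @ w_v                           # (N, D) values from inputs
    logits = k @ q.T / np.sqrt(q.shape[1])     # (N, K) scaled dot products
    # Softmax over the slot axis: slots compete for each input vector.
    attn = softmax(logits, axis=1)             # each row sums to 1
    # Normalize over inputs so each slot receives a weighted mean of values.
    weights = attn / (attn.sum(axis=0, keepdims=True) + eps)
    updates = weights.T @ v                    # (K, D)
    return updates, attn

rng = np.random.default_rng(0)
N, D_in, D, K = 64, 16, 8, 4                   # 64 positions, 4 slots
inputs = rng.normal(size=(N, D_in))
slots = rng.normal(size=(K, D))
w_q = rng.normal(size=(D, D)) / np.sqrt(D)
w_k = rng.normal(size=(D_in, D)) / np.sqrt(D_in)
w_v = rng.normal(size=(D_in, D)) / np.sqrt(D_in)

updates, attn = slot_attention_step(inputs, slots, w_q, w_k, w_v)
print(updates.shape)  # (4, 8): one update vector per slot
```

In the full method, `updates` would be fed into a recurrent update of `slots` and the step repeated a few times.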

From an algorithmic point of view, the clustering performed by SA can be seen as an analog of soft k-means, in which the update rule is a learned recurrent function built from a Gated Recurrent Unit (GRU) and a multi-layer perceptron (MLP). However, k-means is neither the only clustering algorithm nor always the best one. A team of researchers from AIRI, FRC CSC RAS, and MIPT proposed replacing k-means with a Gaussian Mixture Model (GMM) when learning slot representations of scene objects.
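To make the k-means analogy concrete, here is plain soft k-means in NumPy: the clustering core with no learned projections, GRU, or MLP. It is an illustrative sketch, not the authors' code; the function name, the inverse temperature `beta`, and the explicit initialization `mu_init` are assumptions. In Slot Attention, the squared distance is replaced by a dot product between learned keys and queries, and the new centers are passed through the GRU and MLP rather than used directly.

```python
import numpy as np

def soft_kmeans(x, mu_init, beta=5.0, n_iter=20):
    """Soft k-means clustering.

    x: (N, D) points; mu_init: (K, D) initial centers ("slots");
    beta: inverse temperature controlling how hard the assignments are.
    """
    mu = mu_init.astype(float).copy()
    for _ in range(n_iter):
        # Squared distance from every point to every center: (N, K).
        d2 = ((x[:, None, :] - mu[None, :, :]) ** 2).sum(-1)
        # Soft assignments: softmax over centers of -beta * distance.
        logits = -beta * d2
        logits -= logits.max(axis=1, keepdims=True)
        r = np.exp(logits)
        r /= r.sum(axis=1, keepdims=True)
        # Each center becomes the responsibility-weighted mean of the points.
        mu = (r.T @ x) / r.sum(axis=0)[:, None]
    return mu, r

rng = np.random.default_rng(0)
x = np.vstack([rng.normal(-3.0, 0.3, size=(100, 2)),
               rng.normal(3.0, 0.3, size=(100, 2))])
# Initialize one center in each group (first and last point).
mu, r = soft_kmeans(x, mu_init=x[[0, -1]])
print(np.round(mu, 1))  # centers close to (-3, -3) and (3, 3)
```

Because each center is only a weighted mean, the resulting cluster summary carries location information and nothing about how spread out its points are.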

According to the authors, in this approach slots are formed not only from the cluster centers but also from the distances between the clusters and the vectors assigned to them, which makes the slot representation more expressive. The new model is called the Slot Mixture Module (SMM).
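The following is a minimal sketch, assuming diagonal covariances and NumPy, of the Gaussian-mixture clustering that this idea builds on. One EM iteration computes soft responsibilities from the full Gaussian log-density, so each component's spread matters and not just its center, and then re-estimates the mixture weights, means, and variances. The function names and the choice to build a slot by concatenating each component's mean and variance are illustrative assumptions rather than the paper's exact formulation, and the learned GRU/MLP update of the actual model is omitted.

```python
import numpy as np

def gmm_em_step(x, mu, var, w, eps=1e-6):
    """One EM step of a diagonal-covariance Gaussian mixture.

    x: (N, D) inputs; mu, var: (K, D) means and per-dimension variances;
    w: (K,) mixture weights.
    """
    # E-step: log w_k + log N(x_n | mu_k, diag(var_k)), shape (N, K).
    diff2 = (x[:, None, :] - mu[None, :, :]) ** 2
    log_pdf = -0.5 * (np.log(2 * np.pi * var)[None] + diff2 / var[None]).sum(-1)
    logits = np.log(w)[None] + log_pdf
    logits -= logits.max(axis=1, keepdims=True)
    gamma = np.exp(logits)
    gamma /= gamma.sum(axis=1, keepdims=True)          # responsibilities
    # M-step: re-estimate weights, means, and variances.
    nk = gamma.sum(axis=0)                               # (K,) soft counts
    w = nk / len(x)
    mu = (gamma.T @ x) / nk[:, None]
    diff2 = (x[:, None, :] - mu[None, :, :]) ** 2
    var = (gamma[:, :, None] * diff2).sum(axis=0) / nk[:, None] + eps
    return mu, var, w, gamma

def slots_from_mixture(mu, var):
    # Hypothetical slot layout: each slot is [mean, variance] of one component.
    return np.concatenate([mu, var], axis=1)             # (K, 2D)

rng = np.random.default_rng(0)
x = np.vstack([rng.normal(-3.0, 0.3, size=(100, 2)),    # tight cluster
               rng.normal(3.0, 1.0, size=(100, 2))])    # wide cluster
mu, var, w = x[[0, -1]].copy(), np.ones((2, 2)), np.full(2, 0.5)
for _ in range(20):
    mu, var, w, gamma = gmm_em_step(x, mu, var, w)
slots = slots_from_mixture(mu, var)
print(slots.shape)  # (2, 4)
```

The two synthetic clusters have different spreads (standard deviations 0.3 and 1.0); only the variance half of each slot captures that difference, which a center-only summary would discard.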

To evaluate the proposed change, the team ran a series of experiments comparing SA with its counterpart, SMM, using the same encoders and datasets across a wide range of tasks: image reconstruction, set property prediction, object discovery, and concept sampling. The authors also compared against the pure clustering methods (k-means and Gaussian mixtures, respectively), obtained by disabling the GRU and MLP in both slot-based approaches. The experiments showed that replacing SA with SMM improves performance on object-centric tasks: SMM achieved state-of-the-art results in set property prediction, even surpassing a specialized model.

The research was presented at the ICLR 2024 conference, and the paper has been published in its proceedings.