Marat Khamadeev


Researchers trained diffusion models to edit images more accurately and faster

In recent years, diffusion models have proven successful at text-to-image tasks, that is, generating images from textual descriptions. Beyond drawing from scratch, however, it would also be useful for such models to edit existing images. Despite the progress made, developers of new models still find it challenging to balance editing quality against preserving the parts of the image that should remain unchanged.

There are various approaches to this task. For example, some researchers fine-tune the model on the input image so that its structure and all necessary details are preserved during editing. Others modify the diffusion process itself, either at the noise-addition stage or at the reconstruction stage. However, none of the existing approaches strikes an acceptable compromise between speed, versatility, and quality.

Researchers from the "Controllable Generative AI" group at the FusionBrain laboratory at AIRI, together with colleagues from the University of New South Wales and Constructor University in Bremen, proposed a method that achieves such a compromise. As a starting point, they chose to cache the internal representations of the original image during the forward (noising) stage and substitute them during editing in the backward (denoising) stage. However, instead of substituting them explicitly, the scientists proposed adding a special term to the loss function that guides generation in the desired direction. The new method is called Guide-and-Rescale.
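The mechanism above is described only in words, so here is a toy sketch in Python with NumPy of the general idea: cache features during the forward pass, then, rather than overwriting features during editing, take a gradient step on a guidance loss that pulls the edited trajectory's features back toward the cached ones. This is not the authors' code and omits the "rescale" part of the method entirely. All names are hypothetical assumptions: a linear map `W` stands in for the network's internal representations, a deterministic shrink by `ALPHA` stands in for real inversion, and a constant `EDIT_DRIFT` stands in for the effect of the new prompt.

```python
import numpy as np

# Toy, fully hypothetical stand-ins (not the paper's architecture):
# the latent is a 4-D vector and W plays the role of an internal representation.
DIM, STEPS, ALPHA = 4, 10, 0.9
W = np.diag(np.linspace(0.5, 1.5, DIM))          # full-rank toy feature map
EDIT_DRIFT = np.array([0.3, -0.2, 0.1, 0.4])     # hypothetical effect of the edit prompt


def features(x: np.ndarray) -> np.ndarray:
    """Hypothetical internal representation of latent x."""
    return W @ x


def forward_and_cache(x0: np.ndarray) -> tuple:
    """Toy deterministic forward pass; caches features for t = 0..STEPS."""
    cache, x = [features(x0)], x0
    for _ in range(STEPS):
        x = ALPHA * x                            # stand-in for one inversion step
        cache.append(features(x))
    return x, cache


def edit(x0: np.ndarray, guidance_scale: float) -> np.ndarray:
    """Backward pass: toy edit step followed by a gradient step on a guidance loss."""
    x, cache = forward_and_cache(x0)
    lam_max = np.linalg.eigvalsh(W.T @ W).max()
    step = 0.5 * guidance_scale / lam_max        # small enough to be contractive
    for t in range(STEPS, 0, -1):
        x = x / ALPHA + EDIT_DRIFT               # toy denoising step that applies the edit
        # Guidance loss L(x) = ||features(x) - cache[t-1]||^2, gradient w.r.t. x:
        grad = 2.0 * W.T @ (features(x) - cache[t - 1])
        x = x - step * grad                      # nudge features toward the cached ones
    return x


if __name__ == "__main__":
    x0 = np.array([1.0, 2.0, -1.0, 0.5])
    ref = features(x0)
    err_plain = np.linalg.norm(features(edit(x0, 0.0)) - ref)
    err_guided = np.linalg.norm(features(edit(x0, 1.0)) - ref)
    print(f"feature drift without guidance: {err_plain:.3f}")
    print(f"feature drift with guidance:    {err_guided:.3f}")
```

In this linear toy, each guidance step shrinks the feature error along every direction, so the guided run ends closer to the original image's features than the unguided one while still moving in the direction of the edit. A real model would compute such gradients through the network with automatic differentiation.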

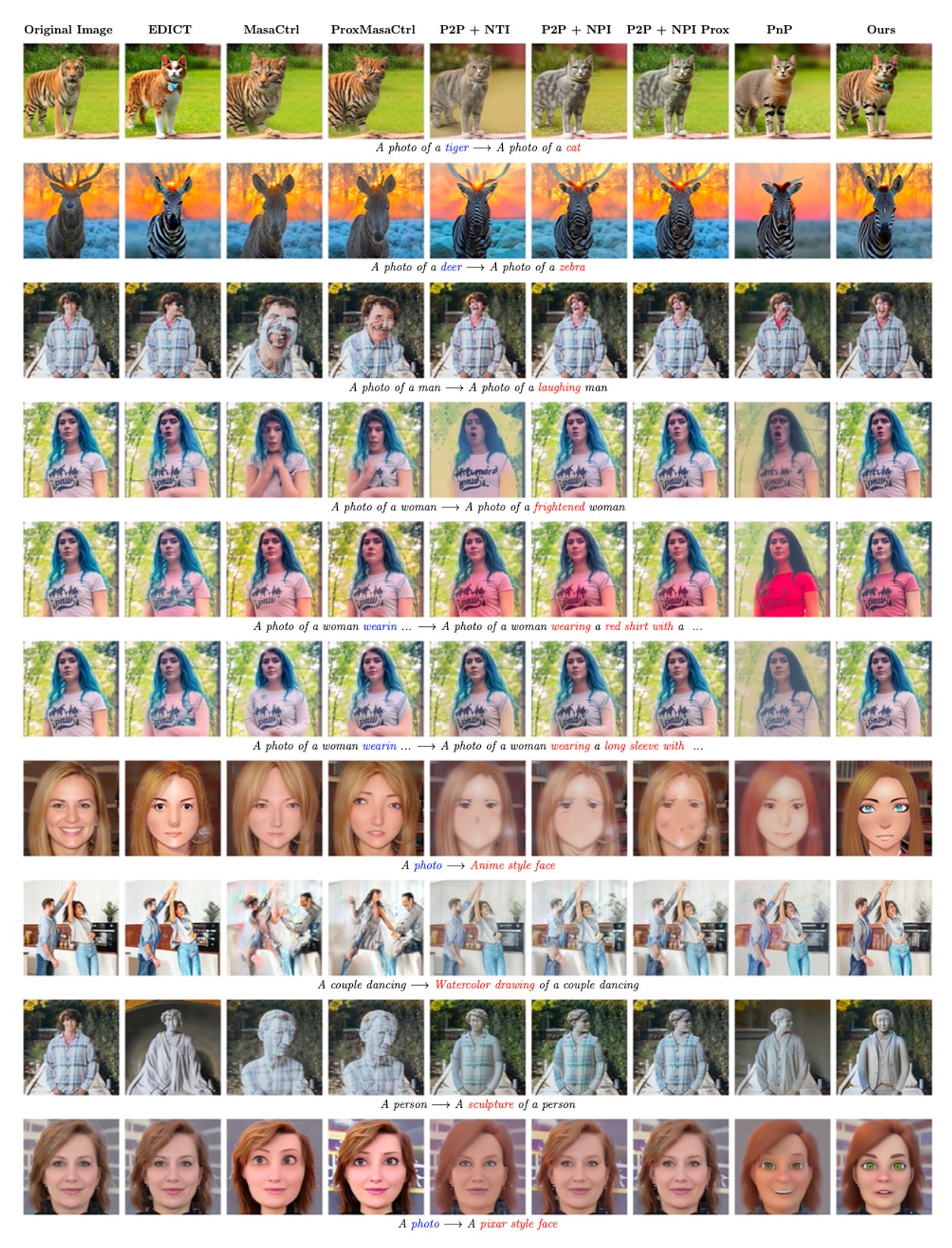

The authors tested their method on four tasks: local edits to a person's appearance and clothing, changing a person's emotions, replacing animals, and global stylization. They also ran several existing editing approaches through the same tests. In these experiments, Guide-and-Rescale produced more stable results and achieved a better trade-off between editing quality and preserving the structure of the original image.

Visual comparison of the new method with baselines on different types of edits

The researchers also conducted a user study in which 62 participants answered a total of 960 questions. This study also confirmed that Guide-and-Rescale delivers better editing quality while preserving the areas that should remain unchanged.

The paper describing the method and results was presented at the ECCV 2024 conference in Milan. The group has released the code on GitHub and a demo on Hugging Face.