Anton Antonov

Anton Antonov

Realistic click simulation will help to improve interactive segmentation models

Interactive object segmentation on images — that is, defining their boundaries — plays an important role in a variety of fields, from medical imaging to special effects creation. Modern interactive segmentation methods aim for maximum automation of this task, utilizing a wide range of machine learning architectures.

For the correct evaluation of such methods, developers need to collect information about how real people interact with them. Typically, this interaction involves a person indicating the object of interest through mouse clicks or screen taps, while the algorithm attempts to segment it correctly. In case of an error, the user is required to point out the area that the algorithm has incorrectly highlighted. In order to understand how well the methods work, realistic models of how people click on objects are needed, but there are not many existing strategies for modeling such behavior.

Researchers from the AIRI, led by Vlad Shakhuro, head of the “Robotics” group, conducted a large-scale study to understand how people interact with interactive segmentation methods when they want to select an object. To do this, they combined several classic datasets for evaluating these methods and collected a total of 475,000 user clicks and taps through a special web interface. The dataset was named RClicks.

In the next phase, the authors trained a clickability model that predicts the probability of a user clicking on each point of the input image. To achieve this, they constructed special probability maps based on the normalized and processed data from RClicks.

The new model paved the way for evaluation of existing interactive segmentation methods (RITM, SimpleClick, SAM, and others) using an approach that is closer to human behavior than the commonly used one — clicking at the center of the largest error area. It turned out that the traditional (baseline) strategy underestimates the actual annotation time. Moreover, this time varies significantly among different user groups. It was also found that there is no segmentation method that is both effective and robust across all datasets.

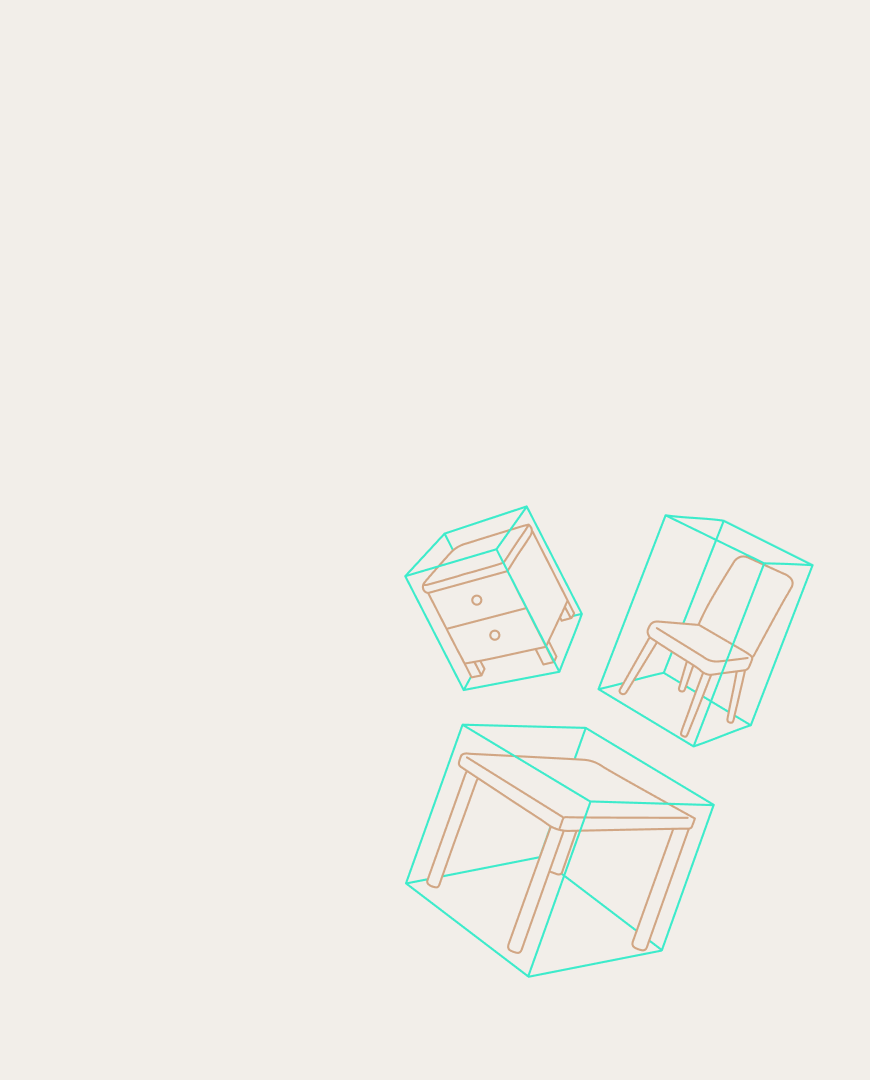

Examples of averaged clicks masks (black-and-white images) obtained on complex images using different methods: RITM, SimpleClick, SAM, SAM-HQ, respectively. Green dots indicate actual clicks.

The benchmark and model created by the authors will allow other researchers to identify the most challenging examples from the sample, assess more realistic annotation times for new datasets, and choose the most optimal annotation method in each specific case when a small labeled subset of the dataset is available.

The paper with details has been published in the proceedings of the NeurIPS 2024 conference, and the code and data are available on GitHub.