Vera Soboleva

Diffusion models can now be effectively fine-tuned using a single image

State-of-the-art diffusion models capable of generating high-quality images are typically trained on large and diverse datasets. However, practical applications often require more personalized outputs, such as generating a specific object based on a limited number of training images.

This challenge can be addressed by fine-tuning the model on a specialized dataset. For large models, however, full fine-tuning is computationally expensive because every model weight must be updated. Low-Rank Adaptation (LoRA) offers a solution: the pretrained weights stay frozen, and the update to each weight matrix is represented as the product of two low-rank matrices, so only a small number of parameters are trained.
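
A minimal NumPy sketch of the LoRA idea (forward pass only, no training loop): the frozen weight W is left untouched and a low-rank update ΔW = (α/r)·B·A is added on top. The names `LoRALinear` and `count_params`, as well as the initialization choices, are illustrative assumptions and are not taken from the T-LoRA code.

```python
import numpy as np


class LoRALinear:
    """Hypothetical LoRA-adapted linear layer: y = x (W + (alpha/r) B A)^T."""

    def __init__(self, weight: np.ndarray, rank: int, alpha: float = 1.0, seed: int = 0):
        rng = np.random.default_rng(seed)
        d_out, d_in = weight.shape
        self.weight = weight  # frozen pretrained weight W, shape (d_out, d_in)
        # Trainable down-projection A, shape (rank, d_in), small random init.
        self.A = rng.normal(0.0, 1.0 / np.sqrt(d_in), size=(rank, d_in))
        # Trainable up-projection B, shape (d_out, rank); zero init so the update starts at 0.
        self.B = np.zeros((d_out, rank))
        self.scale = alpha / rank

    def delta_weight(self) -> np.ndarray:
        # Low-rank update: its rank is at most `rank`.
        return self.scale * self.B @ self.A

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # x: (batch, d_in) -> (batch, d_out)
        return x @ (self.weight + self.delta_weight()).T


def count_params(d_out: int, d_in: int, rank: int) -> dict:
    """Trainable parameters: full fine-tuning vs. LoRA for one weight matrix."""
    return {"full": d_out * d_in, "lora": rank * (d_in + d_out)}
```

For a hypothetical 1280×1280 projection with rank 4, `count_params(1280, 1280, 4)` gives 1,638,400 trainable parameters for full fine-tuning versus 10,240 for LoRA. Because B starts at zero, the adapted layer initially behaves exactly like the pretrained one.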

LoRA has proven effective in real-world applications, offering speed, efficiency, and flexibility in fine-tuning large models. Nevertheless, it still suffers from overfitting when adapting models to extremely small datasets. From a user-experience standpoint, it would be highly convenient to customize a diffusion model using just a single image of the target object. In practice, however, this often leads to poor generalization and low diversity: generated objects tend to reproduce the pose and background of the training image, which limits their adaptability to new contexts described in text prompts.

Researchers from AIRI, HSE, and MSU addressed this issue. They hypothesized that overfitting primarily occurs during fine-tuning at the noisiest timesteps of the diffusion process. At these steps, the model is trained to recover the training images from heavily corrupted inputs, which inadvertently restricts its capacity to generate diverse and flexible scenes. Yet, these noisy steps are crucial for preserving the shape and proportions of the target concept.
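
To make "heavily corrupted inputs" concrete, here is the standard DDPM noise-prediction formulation, shown only for illustration; the specific noise schedule and parametrization used for SDXL fine-tuning are not given in this article. A training input at timestep $t$ is built from a clean image $x_0$ and Gaussian noise, and the model $\varepsilon_\theta$ learns to predict that noise given the prompt $c$:

$$
x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\varepsilon,\qquad \varepsilon \sim \mathcal{N}(0, I),
\qquad
\mathcal{L} = \mathbb{E}_{x_0,\,\varepsilon,\,t}\,\big\|\varepsilon - \varepsilon_\theta(x_t, t, c)\big\|^2 .
$$

At the noisiest timesteps $\bar\alpha_t$ is close to 0, so $x_t$ carries almost no information about $x_0$. Fitting a single training image there requires the model to recover its global layout from nearly pure noise, which is consistent with the authors' hypothesis that these steps are where pose and background get memorized.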

Leveraging this insight, the researchers developed T-LoRA, a framework that extends standard LoRA. Its core idea is that the strength of the training signal should depend on the diffusion timestep. The authors implemented adaptive masking that weakens the training signal at the noisier timesteps (the early steps of generation) and emphasizes it at the less noisy, later ones. To make this masking effective, they also introduced a modification of LoRA that keeps the adapter columns orthogonal throughout training.
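
A minimal sketch of timestep-dependent masking, under stated assumptions. The article does not give the exact schedule, so `active_rank` uses a hypothetical linear rule: the noisiest timestep (t = T) keeps only `r_min` of the r adapter components, the cleanest (t = 0) keeps all r, and the mask zeroes the rest of ΔW = B·diag(m)·A. Orthogonality is illustrated with a QR-based orthogonal initialization of A and a Frobenius-norm penalty ‖AAᵀ − I‖², a common way to encourage orthogonal components; whether T-LoRA uses this exact mechanism is an assumption. All function names are hypothetical.

```python
import numpy as np


def active_rank(t: int, T: int, r: int, r_min: int) -> int:
    """Hypothetical linear schedule: t = T (noisiest) keeps r_min components, t = 0 keeps all r."""
    return (r - r_min) * (T - t) // T + r_min


def rank_mask(t: int, T: int, r: int, r_min: int) -> np.ndarray:
    """Binary mask [1, ..., 1, 0, ..., 0] with active_rank(...) leading ones."""
    k = active_rank(t, T, r, r_min)
    return (np.arange(r) < k).astype(float)


def orthogonal_down_projection(d_in: int, r: int, seed: int = 0) -> np.ndarray:
    """Initialize A (r, d_in) with orthonormal rows via a reduced QR decomposition."""
    rng = np.random.default_rng(seed)
    q, _ = np.linalg.qr(rng.normal(size=(d_in, r)))  # q: (d_in, r), orthonormal columns
    return q.T  # so that A @ A.T == I_r


def orthogonality_penalty(A: np.ndarray) -> float:
    """Assumed regularizer ||A A^T - I||_F^2; zero when the rows of A are orthonormal."""
    r = A.shape[0]
    return float(np.sum((A @ A.T - np.eye(r)) ** 2))


def masked_delta(B: np.ndarray, A: np.ndarray, t: int, T: int, r_min: int,
                 scale: float = 1.0) -> np.ndarray:
    """Timestep-masked LoRA update: scale * B @ diag(m_t) @ A."""
    m = rank_mask(t, T, A.shape[0], r_min)
    return scale * (B * m) @ A  # B * m zeroes the masked columns of B
```

With T = 1000, r = 64 and r_min = 16, this schedule keeps 16 components at t = 1000, 40 at t = 500 and all 64 at t = 0. Because masked components are multiplied by zero, they receive no gradient at that timestep, so at the noisiest steps only the first `r_min` components are updated.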

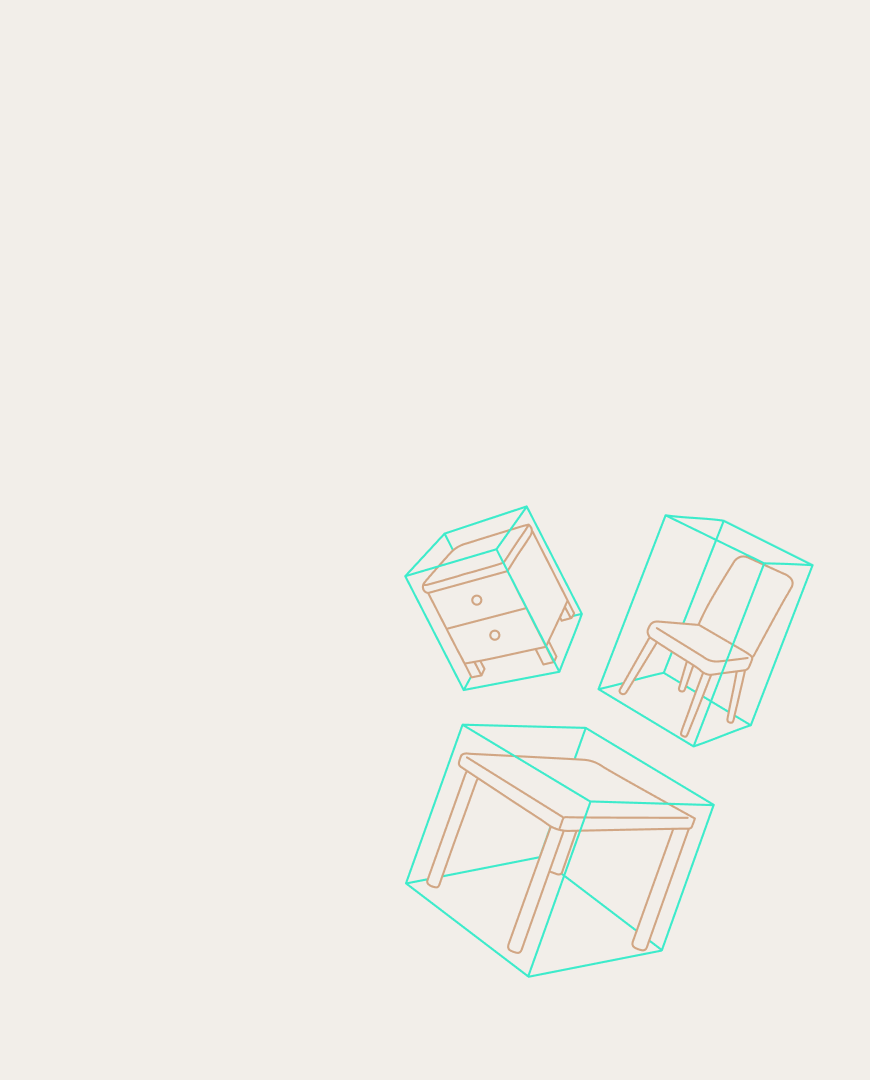

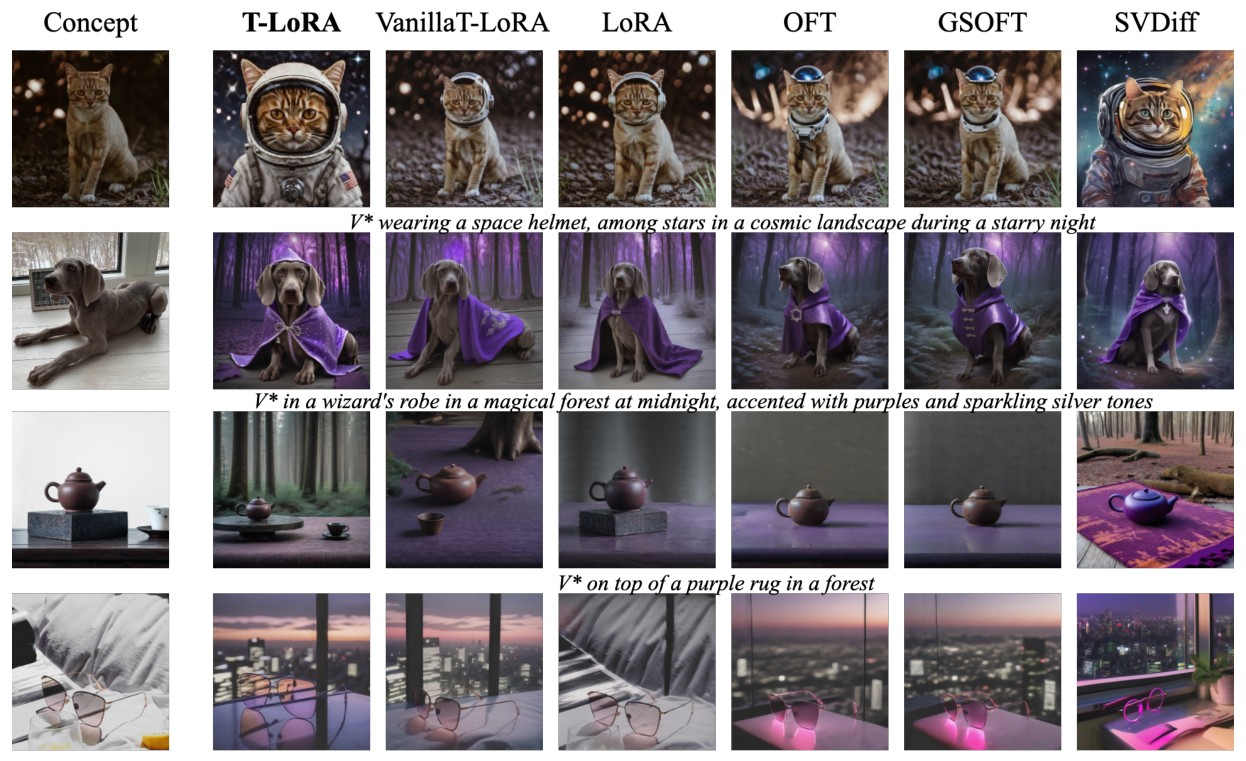

To evaluate their method, the researchers ran experiments on a wide variety of objects, including pets, toys, interior items, accessories, and more. Using T-LoRA, they fine-tuned Stable Diffusion XL (SDXL) on a single image per object and compared the results with standard LoRA and several orthogonal fine-tuning methods. The experiments showed that T-LoRA achieves better prompt alignment and produces significantly more diverse object poses.

Comparison of T-LoRA with its non-orthogonal variant (Vanilla T-LoRA), standard LoRA, and other lightweight fine-tuning methods

More details can be found in the research paper; the T-LoRA code is available on GitHub.