Marat Khamadeev

Researchers made entropic optimal transport scalable and theoretically grounded

Understanding the behavior of systems that consist of many elements inevitably requires a probabilistic approach. A system evolves from one state to another, and this process corresponds to a transformation of the probability distributions that describe the properties of its elements. This picture covers a wide range of phenomena, from the diffusion of atoms and molecules in a gas to the conversion of white noise into meaningful images by generative AI models. Because of this similarity, a whole class of machine learning models is called diffusion models.

To enable faster inference in diffusion models, researchers look for optimal transport (OT) plans between distributions. In recent years, a popular approach has emerged in which OT is regularized with entropy. This makes the transport stochastic and makes it possible to learn one-to-many stochastic mappings with a tunable level of sample diversity. We previously discussed entropic optimal transport (EOT) when we covered a new benchmark, created by a team of researchers from AIRI and Skoltech, for testing neural methods that solve it.
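
For reference, the EOT problem is commonly written as follows. The notation is an assumption introduced here for illustration and is not taken from the article: $P_0$ and $P_1$ are the source and target distributions, $\Pi(P_0, P_1)$ is the set of transport plans with these marginals, $c$ is a transport cost, $H$ is the differential entropy, and $\varepsilon > 0$ is the entropy parameter.

$$
\min_{\pi \in \Pi(P_0, P_1)} \int c(x, y)\, d\pi(x, y) \;-\; \varepsilon H(\pi)
$$

As $\varepsilon \to 0$ the problem approaches unregularized OT, while larger $\varepsilon$ spreads the conditional plan $\pi(\cdot \mid x)$ over more targets, which is the source of the tunable diversity mentioned above.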

Unfortunately, most existing EOT solvers become numerically unstable when the entropy parameter is small, and small values are precisely the ones suited to downstream generative modeling tasks. To solve this problem, part of the same team, in collaboration with colleagues from HSE, proposed a new formulation of the EOT problem.
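
The small-parameter instability can be illustrated with the classic discrete Sinkhorn iteration. This is a much simpler solver than the neural ones discussed here, and it is used only as an assumed stand-in to show the effect. In the minimal sketch below, the point sets, the squared-distance cost, and the function name `sinkhorn_naive` are all hypothetical choices made for illustration.

```python
import numpy as np

def sinkhorn_naive(a, b, C, eps, n_iter=500):
    """Naive (non-log-domain) Sinkhorn iteration for discrete entropic OT."""
    K = np.exp(-C / eps)          # Gibbs kernel; underflows to 0 when eps is tiny
    u = np.ones_like(a)
    v = np.ones_like(b)
    # Silence warnings so the numerical failure shows up in the result itself.
    with np.errstate(divide="ignore", invalid="ignore"):
        for _ in range(n_iter):
            u = a / (K @ v)       # rescale to match the row marginals
            v = b / (K.T @ u)     # rescale to match the column marginals
    return u[:, None] * K * v[None, :]

# Hypothetical toy data: three source and three target points on a line.
x = np.array([0.0, 1.0, 2.0])
y = np.array([0.5, 1.5, 2.5])
C = (x[:, None] - y[None, :]) ** 2      # squared-distance cost matrix
a = np.full(3, 1.0 / 3.0)               # uniform source weights
b = np.full(3, 1.0 / 3.0)               # uniform target weights

plan_ok = sinkhorn_naive(a, b, C, eps=1.0)
plan_small = sinkhorn_naive(a, b, C, eps=1e-4)

print("eps=1.0  plan is finite:", bool(np.isfinite(plan_ok).all()))
print("eps=1e-4 plan is finite:", bool(np.isfinite(plan_small).all()))
```

With `eps=1e-4` every entry of the kernel underflows to zero in double precision, so the scaling vectors become infinite or undefined and the returned plan is not finite. Log-domain variants are the usual remedy in the discrete setting; they are not the approach described in this article.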

The main idea of the new approach is to represent the transport problem as a search for a saddle point of a functional. With this formulation, the researchers can draw on the arsenal of generalized functions and variational calculus. The resulting method is called Entropic Neural Optimal Transport (ENOT).
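
To make the notion of a saddle-point search concrete, here is a minimal sketch of simultaneous gradient descent-ascent on a toy function. The function `L(x, y) = 0.5*x**2 + x*y - 0.5*y**2` is an assumption chosen for illustration only; it is not the ENOT functional, and the names below are hypothetical.

```python
def gradient_descent_ascent(x0, y0, lr=0.1, n_steps=200):
    """Find the saddle point of L(x, y) = 0.5*x**2 + x*y - 0.5*y**2.

    L is convex in x and concave in y, so we descend in x and ascend in y.
    """
    x, y = x0, y0
    for _ in range(n_steps):
        grad_x = x + y            # dL/dx
        grad_y = x - y            # dL/dy
        # Simultaneous update: both steps use the gradients at the old point.
        x, y = x - lr * grad_x, y + lr * grad_y
    return x, y

x_star, y_star = gradient_descent_ascent(1.0, -1.0)
print(x_star, y_star)  # both values end up very close to the saddle point (0, 0)
```

In a neural setting the two scalars would be replaced by the parameters of two networks, one minimizing and one maximizing the objective, with the gradients obtained by automatic differentiation.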

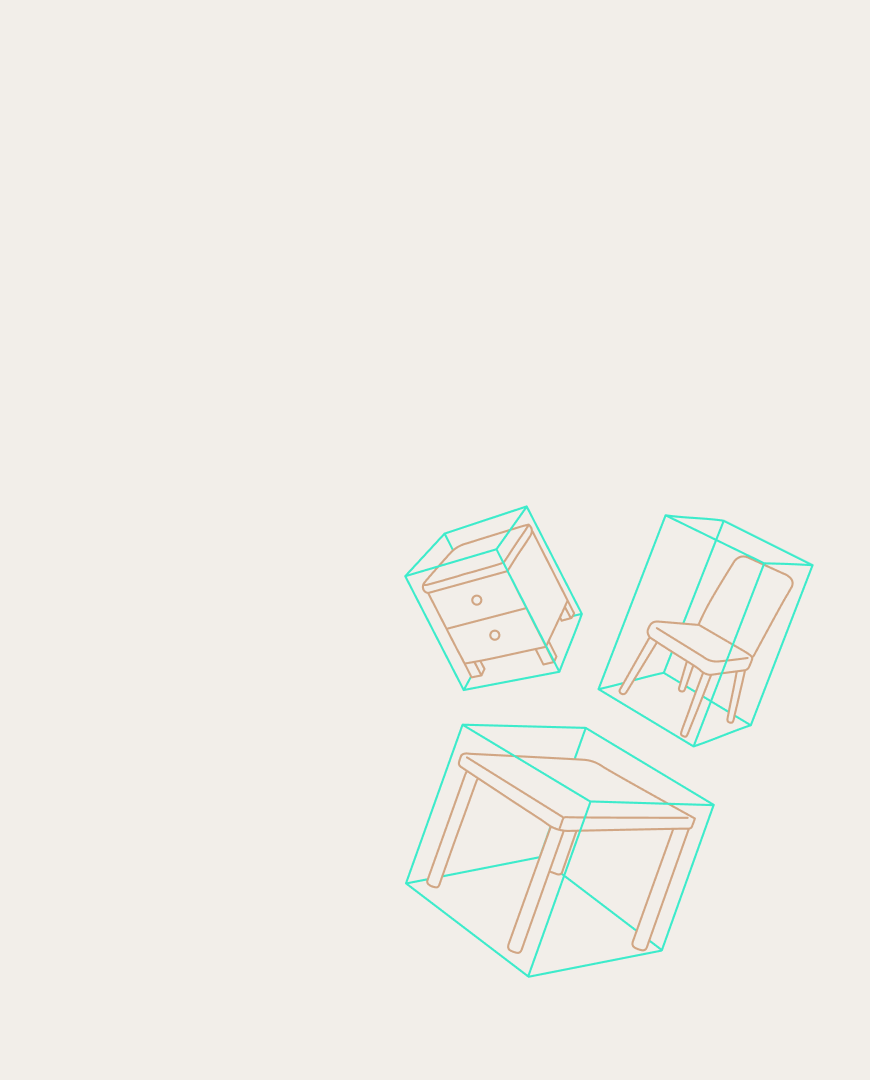

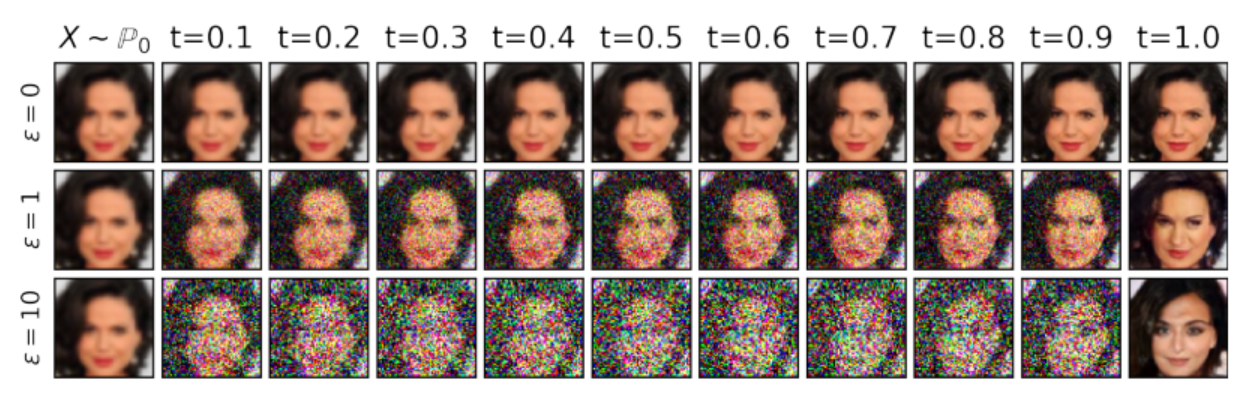

The proposed approach is a scalable way to train neural network models for domain translation on unpaired data. It provides a theoretically grounded mechanism for controlling the level of diversity in the generated objects, whereas existing methods for domain transfer are mostly heuristic. The authors confirmed this in experiments with model distributions, the Colored MNIST dataset, and a dataset of celebrity faces.

Results of the algorithm on a model super-resolution (deblurring) problem for celebrity faces

The project code is available on GitHub, and details of the research can be found in the article published in the proceedings of the NeurIPS 2023 conference.